AV-Racer Devlog (4): Writing a low-level immediate-mode renderer

Friday, August 05, 2022

est. reading time: 16 minutes

Note: The API in question is open source and available here, where you can find the implementation of the functions mentioned in this article.

When I started learning how to write code, I always wanted to make games. But taking on such a large task required simplifying my approach. Still wanting to write things in C, however, and not to use an engine like Unity or UE, I resorted to simpler rendering APIs. SDL2 was a great solution for a beginner. It provided simple yet robust rendering functions, which were good enough for the small scale of things I started with. It was easy to set up and easy to use.

However, as the games grew larger, I ran into the limitations of SDL2. I had to understand how GPUs worked and why calling SDL_RenderCopy() on each of two thousand sprites was a bad idea. SDL2 communicated with the GPU through a draw call on every render call, and there was no way to batch things up and send them to the GPU at once. This was a huge performance limitation. There was also the lack of shaders, which crippled any plan to add post-processing effects. And then there was the lack of 3D rendering. SDL2 really wasn't a viable solution from this point on. I considered software rendering, which, while certainly interesting for educational purposes, wasn't a scalable option for releasing the games I had in mind. So I turned to OpenGL.

To clarify, I decided to move away from SDL's render API, but not from SDL itself. Since it offers a platform layer that handles window creation and event polling, it is still worth using. What I moved away from is the SDL renderer: I used OpenGL to communicate with the GPU while still using SDL for window and platform management.

OpenGL allows low-level communication with the GPU and enough control to be usable for a large project. It took a while to migrate my game's rendering pipeline from SDL2 to OpenGL, as I had no fixed framework for how to organize things. So by the next game, I had to start from scratch again. The problem with prototyping is that you want to get something working as quickly as possible, so resorting to SDL2 at first was a bad but understandable habit. By the time I was halfway through AV-Racer, I was still using SDL2 and planning to migrate to OpenGL, for the third time now. I realized I needed to abstract things into a standalone library I could immediately start with anywhere.

That is where the concept of SimplyRend came from: a small library with few dependencies that can be easily imported into any project, letting me get up and running with a solid groundwork I can always build upon without having to reinvent the wheel every time.

The general algorithm

Before going into the details of the code and functions, it is important to lay out the general approach of how the renderer works.

Immediate mode is a term that describes graphics rendering algorithms where render information is not stored statically but is instead issued "immediately" from different parts of the code. In this API, it means that we can put a render call anywhere in the code, and by the end of the loop all render calls are organized and passed to the GPU. So we aren't restricted in where render calls are placed in code, as long as they are issued in the intended draw order. This is the general approach of the library.

What this means is that we need a framework that keeps lists of render commands for every frame, lists whose contents can change every frame. During the loop we push render commands onto the lists without communicating with the GPU at all. Then, at the end of the frame, we organize the commands and send them to the GPU in groups to limit the number of draw calls. By design, the library should also support convenient extensions, like a built-in sprite importing and animation rendering system, and font packing and rendering.

The library gets initialized and the lists get allocated on the heap; then graphical and font assets get loaded and packed into textures, and shaders and render targets get defined. All of that happens at load time, before the main loop. Then, inside the loop, each frame starts with empty lists and collects render data from render calls. At the end of the frame, the lists are organized and sent to the GPU, and the frame gets drawn. Then the loop starts again. This is the general algorithm. It is important to note that SimplyRend is designed with 2D engines in mind; 3D frameworks require different approaches.
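To make the lifecycle above concrete, here is a minimal sketch of a single render list, assuming a fixed capacity chosen at initialization (SimplyRend uses static maximums rather than dynamic arrays, as covered later). `RectList`, `InitRectList`, `StartFrame`, `PushRect` and `Render` are hypothetical names for illustration, not SimplyRend's actual API, and the GPU upload is reduced to returning the number of queued commands.

```cpp
#include <cassert>
#include <cstdlib>

// Hypothetical stand-in: the real library stores full vertex data per rect.
struct Rect { float x, y, w, h; };

struct RectList {
    Rect *Items = nullptr; // allocated once, before the main loop
    int Capacity = 0;      // fixed maximum chosen at initialization
    int Count = 0;         // reset at the start of every frame
};

// Load time: allocate the list once on the heap.
RectList InitRectList(int maxRects) {
    assert(maxRects > 0);
    RectList L;
    L.Items = static_cast<Rect *>(std::calloc(maxRects, sizeof(Rect)));
    L.Capacity = maxRects;
    return L;
}

// Start of frame: forget last frame's commands without freeing memory.
void StartFrame(RectList *L) { L->Count = 0; }

// Anywhere during the frame: record a command, no GPU work yet.
bool PushRect(RectList *L, Rect r) {
    if (L->Count >= L->Capacity) return false; // list full: drop the command
    L->Items[L->Count++] = r;
    return true;
}

// End of frame: a real renderer would upload Items[0..Count) in one buffer
// update and issue batched draw calls here. The sketch returns the count.
int Render(const RectList *L) { return L->Count; }
```

The point of the design is that `PushRect` only writes to memory, so it can be called from anywhere in the frame; all GPU traffic is deferred to the single call at the end.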

Now that we've gone through the general idea, we can go into detail on how it is done, starting with the backend:

Data structures

Starting any OpenGL project, you face a lot of setup before you can render the proverbial "Hello Triangle". You have to generate certain buffers and attributes, assign information to them, and create and compile shaders and programs, all before rendering anything. Most of that process is repetitive as well. Primitives like basic rectangles (filled, framed, and textured) are universal in their properties. They can be abstracted, alongside the setup process, into default rendering frameworks.

Let's take a look at the data structures within the library. The renderer uses a global context declared with the library. Note that the data in the following structures is handled internally; the user-facing front end only deals with function calls and the pointers they return:

Primitives

Since we are setting up a renderer for a 2D framework, we are mostly working with rectangles, while the OpenGL API works with triangles. We can therefore define each rectangle as two triangles and abstract that into a single rectangle structure holding 6 points. We then store an array of these rectangles alongside the vertex buffer object (VBO) and vertex array object (VAO) that OpenGL needs, and wrap all of that in a single structure stored in the global context.

```cpp
struct SR_Vertex
{
    v2 P;
    v2 UV;
    SR_Color C;
    float Z;
};

struct SR_ObjectRects
{
    struct glrect
    {
        SR_Vertex V[6];
    };

    GLuint VBO = 0;
    GLuint VAO = 0;
    glrect *R;
    int *render_layer_index;
    int Count;
};
```

SimplyRend uses the 2D vector type 'v2' from my growing math library, Emaths. But since OpenGL is a 3D API, the Z component is used to order render objects in 2D, and because SimplyRend's vectors are 2D, that Z component is stored separately.

SR_Vertex is used as the standard for point data:

P is the position, in pixels.

UV holds the texture coordinates.

C is an SR_Color, a 4D vector that holds the red, green, blue and alpha values.

Z is the depth used for ordering, as explained above.

glrect wraps 6 of these vertices, and SR_ObjectRects wraps an array of these rectangles (R) into one object.

Count stores the number of defined rectangles.

render_layer_index is a bookmark array that will be explained later.
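Because every vertex field is a float (assuming `v2` is two floats and `SR_Color` is four, which the post implies but doesn't show), the interleaved layout can be described to OpenGL with one stride and four offsets. The sketch below computes them with `offsetof`; the constant names and `RectBytes` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// Assumption: v2 is two floats and SR_Color is four floats (RGBA).
// Emaths' actual definitions may differ.
struct v2 { float x, y; };
struct SR_Color { float r, g, b, a; };

struct SR_Vertex {
    v2 P;       // position in pixels
    v2 UV;      // texture coordinates
    SR_Color C; // vertex color
    float Z;    // depth used for 2D ordering
};

// Guard the "no padding" assumption the offsets below rely on.
static_assert(sizeof(SR_Vertex) == 9 * sizeof(float), "SR_Vertex is expected to be tightly packed");

// Values one would pass as `stride` and `pointer` to glVertexAttribPointer
// for each attribute of an interleaved SR_Vertex buffer.
constexpr std::size_t kStride   = sizeof(SR_Vertex);
constexpr std::size_t kOffsetP  = offsetof(SR_Vertex, P);
constexpr std::size_t kOffsetUV = offsetof(SR_Vertex, UV);
constexpr std::size_t kOffsetC  = offsetof(SR_Vertex, C);
constexpr std::size_t kOffsetZ  = offsetof(SR_Vertex, Z);

// Each glrect is 6 vertices, so a buffer of n rects needs n * 6 * kStride bytes.
constexpr std::size_t RectBytes(std::size_t n) { return n * 6 * kStride; }
```

Each offset would then go into a call such as `glVertexAttribPointer(index, 2, GL_FLOAT, GL_FALSE, kStride, (void *)kOffsetP)`, with attribute indices matching the shader (the indices SimplyRend actually uses aren't given here). `RectBytes` also shows what a fixed maximum costs up front: with 4-byte floats, 10,000 rects take 10,000 * 6 * 36 = 2,160,000 bytes.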

Another important structure is the one used to draw lines; it is also used to render framed rectangles. Each line holds two points, so we define:

```cpp
struct glline
{
    SR_Vertex V[2];
};
```
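A framed rectangle then becomes four of these lines, i.e. eight vertices drawn as `GL_LINES`. The helper below is a hypothetical sketch of that expansion, assuming a top-left origin with y pointing down (consistent with the pixel-space convention of the vertex shader shown later) and redefining the vertex types so it compiles on its own.

```cpp
#include <cassert>

struct v2 { float x, y; };
struct SR_Color { float r, g, b, a; };
struct SR_Vertex { v2 P; v2 UV; SR_Color C; float Z; };
struct glline { SR_Vertex V[2]; };

// Hypothetical helper: expands a framed rectangle (top-left x, y, size w, h)
// into its four edges, written into out[0..3]. UVs are unused for
// untextured lines and left at zero.
void FramedRectToLines(float x, float y, float w, float h,
                       SR_Color c, float z, glline out[4]) {
    assert(w >= 0 && h >= 0);
    // Top-left, top-right, bottom-right, bottom-left (clockwise on screen, y down).
    const v2 corners[4] = {{x, y}, {x + w, y}, {x + w, y + h}, {x, y + h}};
    for (int i = 0; i < 4; ++i) {
        const v2 a = corners[i];
        const v2 b = corners[(i + 1) % 4]; // last edge wraps back to the first corner
        out[i].V[0] = SR_Vertex{a, {0, 0}, c, z};
        out[i].V[1] = SR_Vertex{b, {0, 0}, c, z};
    }
}
```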

Render layers

The rendering system in SimplyRend revolves around render layers. A render layer in this context is an abstraction of render calls that share common conditions, such as a shared texture, a specific target buffer, a post-processing effect applied to the layer, or a cropped viewport. Another reason to use layers is to manually separate physical layers of rendered objects to guarantee correct render order and prevent alpha blending bugs. Since SimplyRend is designed with 2D in mind, it takes care of Z ordering on the backend and provides render layers as a way to separate render groups.

I am, however, contemplating changing the system to allow direct Z ordering, which would eliminate the need for many of the extra layer calls the current system requires. For now, this post covers the system as it existed in AV-Racer when I shipped it back in May 2022.

```cpp
struct SR_RenderLayer
{
    struct scissor
    {
        bool on = false;
        SR_Rect rect;
    };

    struct postprocess
    {
        bool on = false;
        SR_Target *Origin;
        SR_Target *Target;
        SR_Program *Program;
    };

    bool clear = false;
    SR_Color ClearColor;
    SR_Target *Target;
    scissor Clip;
    postprocess PP;
    SR_Texture *texture;
};
```
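One detail worth spelling out for the `scissor` member: `glScissor` takes window coordinates with the origin at the bottom-left, while the rest of this pipeline works in pixels with a top-left origin. A minimal conversion sketch, assuming `SR_Rect` holds integer x, y, w, h in framebuffer pixels (its definition isn't shown in this post) and with `ToGLScissor` and `ScissorBox` as hypothetical names:

```cpp
#include <cassert>

struct SR_Rect { int x, y, w, h; }; // assumption: pixels, top-left origin, y down

struct ScissorBox { int x, y, w, h; }; // arguments for glScissor(x, y, w, h)

// glScissor uses window coordinates whose origin is the bottom-left corner,
// so a top-left-origin rect is flipped vertically using the height of the
// framebuffer currently being rendered to.
ScissorBox ToGLScissor(SR_Rect r, int framebufferHeight) {
    assert(framebufferHeight > 0);
    return ScissorBox{r.x, framebufferHeight - (r.y + r.h), r.w, r.h};
}
```

If the rect is expressed in the logical 16:9 UI space described in the Initialization section, it would also need the same scale factor the shaders apply, since the scissor test works on framebuffer pixels and never passes through the vertex shader.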

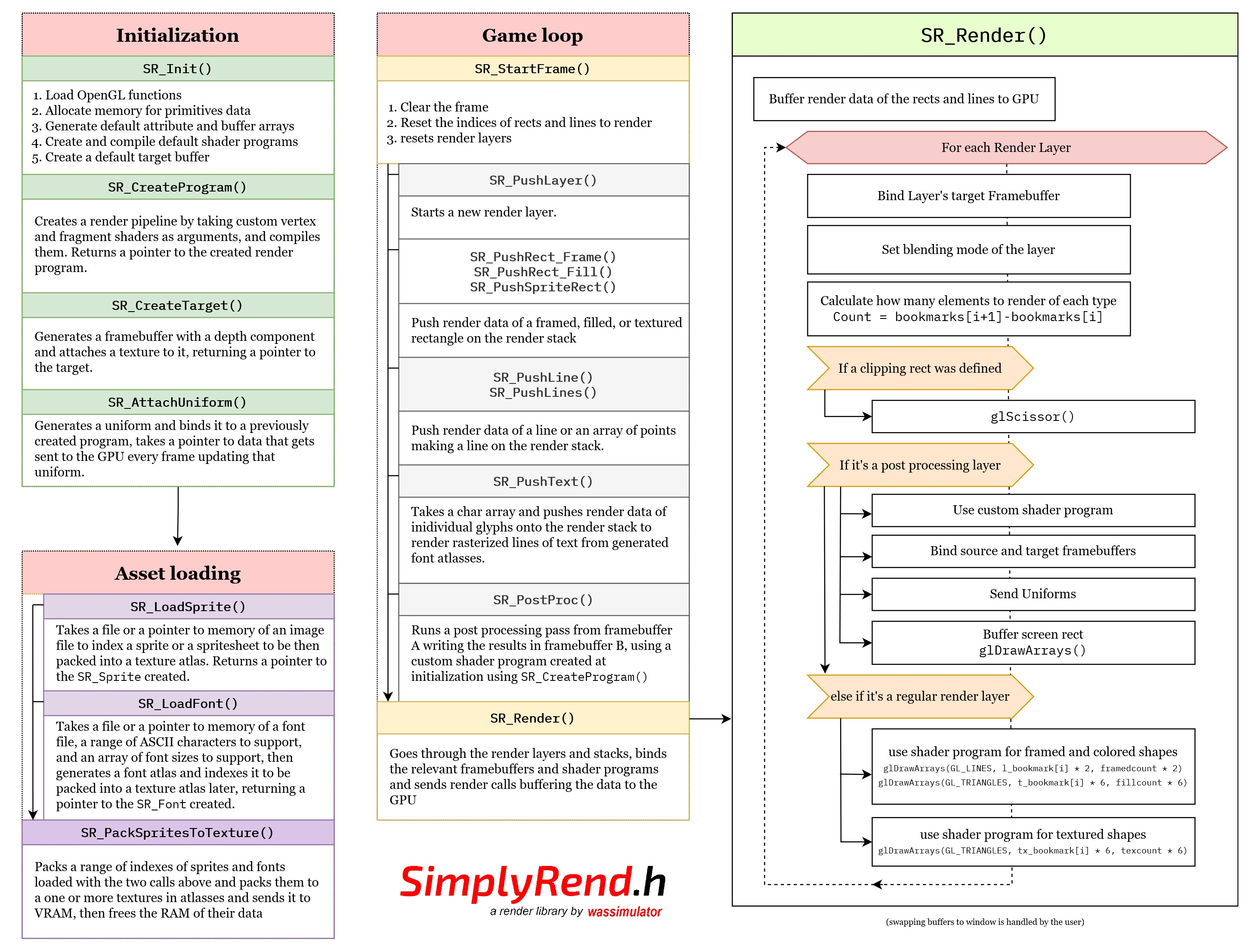

The implementation falls into three main categories: initialization, loading assets, and the game loop. We initialize the library, set up our rendering pipelines, and load all assets before the loop; then we call the relevant render functions during the loop.

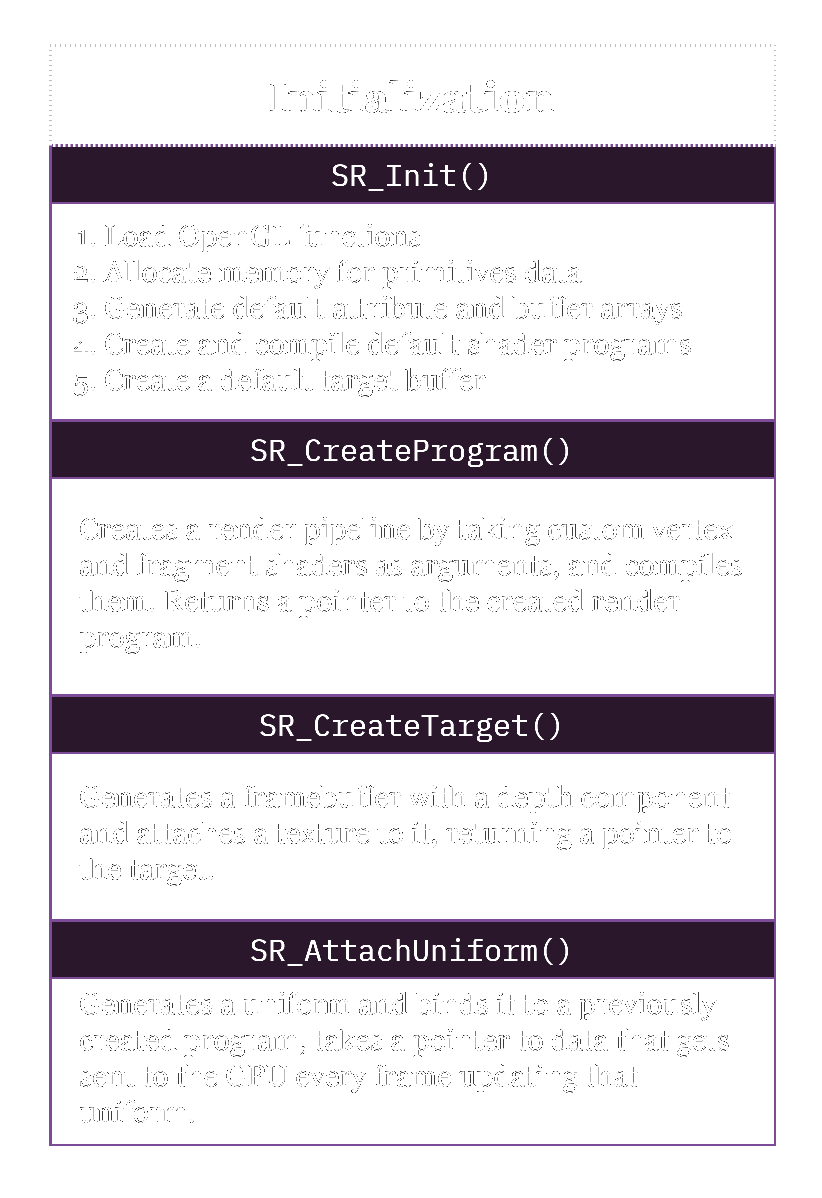

Initialization

Having established these basics, we can run the initialization function.

SR_Init() initializes the OpenGL context and allocates system memory for the vertex data. Since that memory is allocated once at the beginning of the program, SimplyRend requires maximum rect counts to be defined up front, which, in retrospect, can be wasteful if not planned well. Moving towards dynamic allocation would be a worthwhile future improvement.

Next, we generate the vertex arrays and buffers, describe our data structure layout with vertex attributes, and create our basic pipelines. Here we need two: one for rendering colored and framed rectangles and lines, and one for rendering textured rectangles. Since these stay almost the same from one project to the next, the library has both pipelines built in.

To handle render data on the CPU with ease, the conversion from pixel space to GL space is moved to the vertex shader, which makes rescaling simpler. The current version of SimplyRend does not support arbitrary aspect ratios. It was built around a game with a static 16:9 UI, so everything was logically written in that aspect ratio and then transformed to the correct scaled size in the shaders. Though supporting arbitrary aspect ratios should be easy to implement, changing the way I wrote the UI for AV-Racer after the fact would be much more tedious.

Here is the relevant conversion function in the vertex shader, which takes pixel coordinates and converts them to OpenGL coordinates in [-1, 1], accounting for the frame width and height (FW and FH), built-in uniforms passed by the library:

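```glsl
// Converts a position in pixels (origin top-left, y down) to normalized
// device coordinates in [-1, 1] (origin center, y up).
// FW and FH are the frame width and height uniforms set by the library.
vec2 Pixel2GL(vec2 P)
{
    vec2 Res = P / vec2(FW, FH); // [0, FW] x [0, FH] -> [0, 1] x [0, 1]
    Res = Res * 2.0 - 1.0;       // [0, 1] -> [-1, 1]
    Res.y *= -1.0;               // flip: pixel y grows down, GL y grows up
    return Res;
}
```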
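To sanity-check the mapping, here is a CPU-side C++ mirror of `Pixel2GL`, with `FW` and `FH` passed as parameters instead of uniforms. It is a testing sketch, not part of the library.

```cpp
#include <cassert>

struct v2 { float x, y; };

// CPU mirror of the Pixel2GL shader function: pixels with a top-left origin
// become normalized device coordinates in [-1, 1] with +y pointing up.
v2 Pixel2GL(v2 P, float FW, float FH) {
    assert(FW > 0 && FH > 0);
    v2 Res = {P.x / FW, P.y / FH}; // [0, 1] range
    Res.x = Res.x * 2 - 1;         // [-1, 1] range
    Res.y = Res.y * 2 - 1;
    Res.y *= -1;                   // flip y
    return Res;
}
```

The top-left corner of a 1920x1080 frame maps to (-1, 1), its bottom-right corner to (1, -1), and its center to (0, 0).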

In the fragment shader for textured rects, I incorporated a smoothstep function to deal with texture filtering in scaled pixel art (a problem that still persists in Unity).
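That shader isn't shown here, so the following is only a sketch of one common way smoothstep is used for this: sample a `GL_LINEAR`-filtered texture, but remap the coordinate so texel centers stay sharp and blending only happens in a thin band around texel edges. It is written in C++ for a single axis so it can be tested; in GLSL the same math applies per component, and `aa` would typically be derived from `fwidth()`. `SharpPixelUV` and its parameters are hypothetical, and SimplyRend's actual shader may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Same definition as GLSL smoothstep: 0 below e0, 1 above e1, Hermite in between.
float SmoothStep(float e0, float e1, float x) {
    float t = std::clamp((x - e0) / (e1 - e0), 0.0f, 1.0f);
    return t * t * (3.0f - 2.0f * t);
}

// `uv` is a texture coordinate in [0, 1], `texSize` the texture size in
// texels, `aa` the half-width of the blend band in texels. The result is
// meant to be fed to a GL_LINEAR sampler.
float SharpPixelUV(float uv, float texSize, float aa) {
    assert(texSize > 0 && aa > 0);
    float p  = uv * texSize - 0.5f; // texel centers land on integers
    float fl = std::floor(p);
    float fr = p - fl;              // position between two texel centers
    fr = SmoothStep(0.5f - aa, 0.5f + aa, fr); // snap except near the texel edge
    return (fl + fr + 0.5f) / texSize;          // back to [0, 1] UV space
}
```

Inside a texel the coordinate snaps to that texel's center, so bilinear filtering returns its exact color; only at the boundary between two texels does it blend, which keeps scaled pixel art crisp without the shimmering of pure nearest-neighbor sampling at non-integer scales.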

After writing the shaders, we can create the pipelines. SimplyRend abstracts render program creation into a single function, which creates and compiles the shader program and returns a pointer to a struct that wraps all the relevant data in one object. During initialization, the first two programs are reserved internally for colored and textured primitives, respectively. Then we create a render target that defines the main screen buffer as the default target. Having the screen as a distinct target makes manipulating the screen buffer with post-processing passes easier.

After calling the initialization function, the user can call SR_CreateProgram() to create custom post-processing shaders, then use SR_AttachUniform() to bind data in RAM to uniforms, which are passed to the GPU automatically whenever the relevant program is used. After setting these up, post-processing passes can be done with single calls in the loop. Here is an example of how these functions are used, taken from the source code of AV-Racer:

```cpp
shaders* S = &G->Graphics.Shaders.S;
uniforms* U = &G->Graphics.Shaders.Uniforms;

S->pixel_shader.P   = SR_CreateProgram(CustomShaders[0], CustomShaders[1]);
S->crt_shader.P     = SR_CreateProgram(CustomShaders[0], CustomShaders[2]);
S->distort_shader.P = SR_CreateProgram(CustomShaders[0], CustomShaders[3]);
S->CRTon_shader.P   = SR_CreateProgram(CustomShaders[0], CustomShaders[4]);
S->Static_shader.P  = SR_CreateProgram(CustomShaders[0], CustomShaders[5]);
S->Smoke_shader.P   = SR_CreateProgram(CustomShaders[0], CustomShaders[6]);

SR_AttachUniform(S->Static_shader.P,  10, &U->iTime,         1, SR_UNIFORM_FLOAT);
SR_AttachUniform(S->distort_shader.P, 10, &U->iTime,         1, SR_UNIFORM_FLOAT);
SR_AttachUniform(S->distort_shader.P, 11, &U->VertMove,      1, SR_UNIFORM_FLOAT);
SR_AttachUniform(S->distort_shader.P, 12, &U->Noise,         1, SR_UNIFORM_FLOAT);
SR_AttachUniform(S->distort_shader.P, 13, &U->Fuzz,          1, SR_UNIFORM_FLOAT);
SR_AttachUniform(S->distort_shader.P, 14, &U->CRT_Intensity, 1, SR_UNIFORM_FLOAT);
SR_AttachUniform(S->pixel_shader.P,   10, &U->ColorDevide,   1, SR_UNIFORM_INT  );
SR_AttachUniform(S->pixel_shader.P,   11, &U->PixelScale,    1, SR_UNIFORM_FLOAT);
SR_AttachUniform(S->CRTon_shader.P,   10, &U->CrtOn,         1, SR_UNIFORM_INT  );
SR_AttachUniform(S->CRTon_shader.P,   11, &U->CrtOn_time,    1, SR_UNIFORM_FLOAT);
SR_AttachUniform(S->crt_shader.P,     10, &U->CRT_Intensity, 1, SR_UNIFORM_FLOAT);
SR_AttachUniform(S->crt_shader.P,     11, &U->CRT_Curve,     1, SR_UNIFORM_FLOAT);
```

The game uses 6 different custom shaders for its post-processing effects; here we create them and generate and bind uniforms to the data we update in the loop. The second argument of the SR_AttachUniform() calls is the explicit location of the uniform in the GLSL code, as glGetUniformLocation() was problematic on AMD drivers, among other things. So the library expects custom shaders to declare explicit uniform locations.
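The key behavior of SR_AttachUniform() as described is late binding: the program stores a pointer to game data and reads it whenever the program is used, so updating `U->iTime` in the loop is enough. Below is a hypothetical, GL-free sketch of that idea; the types and function names are invented for illustration. On the GLSL side, explicit locations look like `layout(location = 10) uniform float iTime;`, which requires OpenGL 4.3 or the `ARB_explicit_uniform_location` extension.

```cpp
#include <cassert>
#include <vector>

enum UniformType { UNIFORM_FLOAT, UNIFORM_INT };

struct AttachedUniform {
    int Location;     // matches layout(location = N) in the shader
    const void *Data; // points at game memory, dereferenced at use time
    int Count;        // number of elements
    UniformType Type;
};

struct Program {
    std::vector<AttachedUniform> Uniforms;
};

void AttachUniform(Program *p, int location, const void *data, int count, UniformType type) {
    assert(data && count > 0);
    p->Uniforms.push_back({location, data, count, type});
}

// Stand-in for the upload step. A real renderer would call
// glUniform1fv(u.Location, u.Count, (const GLfloat *)u.Data) (or glUniform1iv)
// for each attached uniform when the program is bound; this sketch just reads
// the current float value at `location` so the late binding can be checked.
float ReadFloatAtUse(const Program &p, int location) {
    for (const AttachedUniform &u : p.Uniforms)
        if (u.Location == location && u.Type == UNIFORM_FLOAT)
            return *static_cast<const float *>(u.Data);
    return 0.0f;
}
```

Because only the pointer is stored, the game never has to call anything when a value changes; the trade-off is that the pointed-to data must outlive the program.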

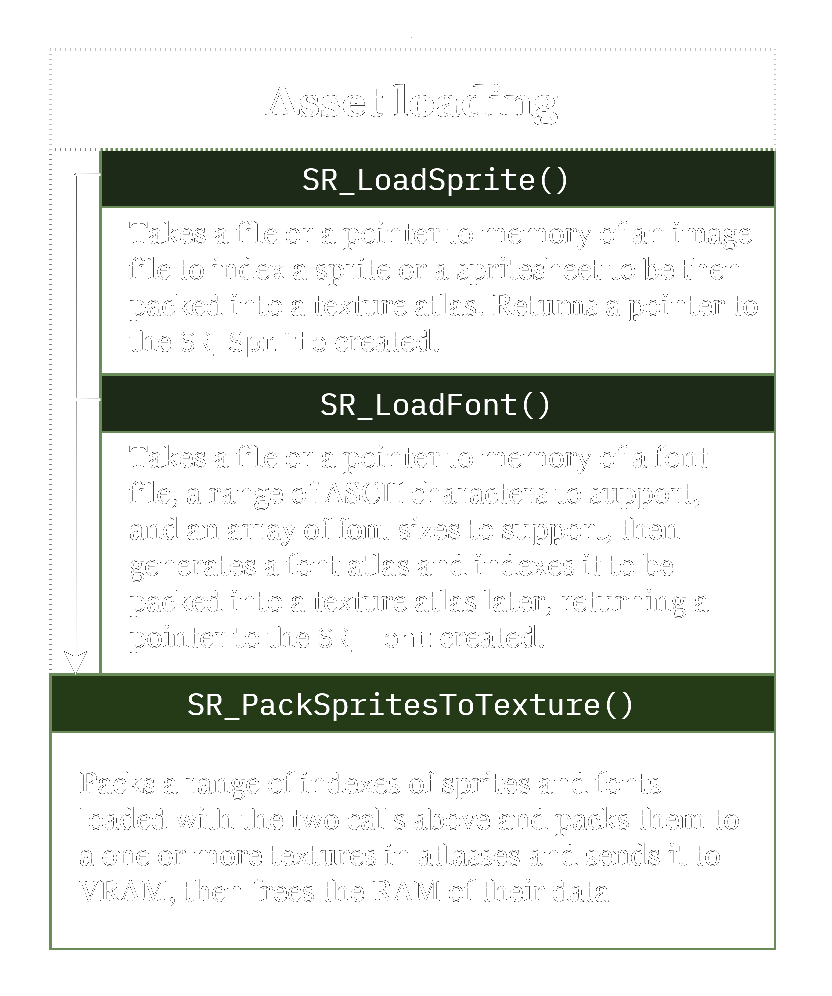

Asset loading

After initializing the library, there are built-in functions that streamline asset loading. Since SimplyRend was made for 2D games, where rendering revolves around the concept of 'sprites', the asset loading step mostly consists of building texture atlases from small individual images. This works fine for a small game like AV-Racer, since the time it takes to pack and generate the textures is negligible, but large games with a non-trivial amount of textures may benefit from generating those atlases beforehand and shipping them with the game, rather than having the game do it every time it loads. But I am here to describe how the current version works, so let's stick to that.

The data structures are organized on the heap such that there is an array of sprite structs within the SimplyRend context. Every time we call SR_LoadSprite(), the function decodes the file to pixel data, copies that data to RAM, and fills in relevant metadata like width and height, all in the Nth element of the sprite array; it then returns a pointer to that element and advances N by 1.

```cpp
typedef struct SR_Sprite
{
    int w, h, n;
    int x, y;
    SR_Texture *texture;
    unsigned char *PixelsRGBA;
    SR_Color ModColor;
    int frames;
    bool animated;
    int frame_i = 0;
    int ID = 0;
} SR_Sprite;
```
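A trimmed-down sketch of the "Nth element" bookkeeping follows. It assumes a fixed-capacity array and takes already-decoded RGBA pixels, since the decoding step is out of scope here; `Sprite`, `Context`, `kMaxSprites` and `LoadSprite` are hypothetical stand-ins for the real structs and SR_LoadSprite().

```cpp
#include <cassert>
#include <cstdlib>
#include <cstring>

struct Sprite {
    int w = 0, h = 0;
    unsigned char *PixelsRGBA = nullptr; // CPU copy, freed after packing
    int ID = 0;
};

constexpr int kMaxSprites = 256; // assumption: fixed capacity

struct Context {
    Sprite Sprites[kMaxSprites];
    int N = 0; // next free slot
};

// Copies the pixels into the Nth slot, fills in metadata, advances N,
// and returns a pointer to the filled slot (nullptr when full).
Sprite *LoadSprite(Context *ctx, const unsigned char *rgba, int w, int h) {
    if (ctx->N >= kMaxSprites) return nullptr;
    Sprite *s = &ctx->Sprites[ctx->N];
    std::size_t bytes = static_cast<std::size_t>(w) * h * 4;
    s->PixelsRGBA = static_cast<unsigned char *>(std::malloc(bytes));
    assert(s->PixelsRGBA);
    std::memcpy(s->PixelsRGBA, rgba, bytes);
    s->w = w;
    s->h = h;
    s->ID = ctx->N;
    ctx->N += 1;
    return s;
}
```

Handing out pointers into a fixed array keeps them valid for the lifetime of the program; with a growable container such as `std::vector`, a later load could reallocate and invalidate earlier pointers.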

Another painful endeavor I had to go through every time I started a new game project was making a good text renderer. At first I used SDL_TTF, but that library rasterized text at runtime, which was not performant. Next I tried creating font atlases and calling SDL_RenderCopy on each glyph. That was even worse. In the end, what you really need in order to have anything decently performant is preloaded font textures and a large batch of glyph data sent to the GPU in a single call. So SimplyRend does that. First, the library uses Sean Barrett's stb_truetype to create font atlases, and then it packs each atlas as a custom sprite. The font's metadata is stored and used at runtime for render calls. As with sprites, the library's main context struct holds an array of font structs, and SR_LoadFont() returns a pointer to the Nth element of that array.

```cpp
typedef struct SR_Font
{
    SR_uint ID;
    SR_Sprite *Sprite;
    int *sizes;
    stbtt_packedchar **CharData;
    int CharCount;
    int SizesCount;
    int First;
    unsigned char *Pixels;
    stbtt_fontinfo Info;
    stbtt_pack_context PackContex;
    SR_Texture *Atlas;
    int W, H;
} SR_Font;
```

After loading sprites and fonts, all the pixel data is stored in RAM. The next step is to send that data to the GPU as textures. To minimize state changes, sprites, sprite sheets, and font atlases can all be packed into one or more large textures, which can then be sent to the GPU.

SR_PackSpritesToTexture() goes through the sprites loaded in memory, generates large textures, packs the sprites into them using stb_rect_pack, uploads them to VRAM, and then frees the RAM holding the pixel data.
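SimplyRend uses stb_rect_pack for the packing itself. Purely to illustrate what the packing step produces, here is a much simpler "shelf" packer swapped in for stb_rect_pack's skyline algorithm, plus the row copy that places a sprite's pixels into the atlas. All names are hypothetical; a real implementation would also record each sprite's atlas position to compute its UVs (x / atlasWidth, and so on).

```cpp
#include <cassert>
#include <vector>

// Simple shelf packer (NOT stb_rect_pack): rects are placed left to right;
// when a row is full, a new shelf starts below the tallest rect of the row.
struct PackRect { int w, h; int x = 0, y = 0; bool packed = false; };

bool ShelfPack(std::vector<PackRect> &rects, int atlasW, int atlasH) {
    int penX = 0, penY = 0, shelfH = 0;
    bool all = true;
    for (PackRect &r : rects) {
        if (r.w > atlasW) { all = false; continue; } // can never fit
        if (penX + r.w > atlasW) {                    // row full: new shelf
            penX = 0;
            penY += shelfH;
            shelfH = 0;
        }
        if (penY + r.h > atlasH) { all = false; continue; } // out of space
        r.x = penX;
        r.y = penY;
        r.packed = true;
        penX += r.w;
        if (r.h > shelfH) shelfH = r.h;
    }
    return all;
}

// Copy one sprite's RGBA rows into the atlas at its packed position.
void Blit(std::vector<unsigned char> &atlas, int atlasW,
          const unsigned char *src, const PackRect &r) {
    assert(r.packed);
    for (int row = 0; row < r.h; ++row)
        for (int b = 0; b < r.w * 4; ++b)
            atlas[((r.y + row) * atlasW + r.x) * 4 + b] = src[row * r.w * 4 + b];
}
```

Sorting the rects by height before packing usually makes shelves waste less space; skyline packers like stb_rect_pack do better still on mixed sizes.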

It is important to note that all these approaches work well on a small scale but require fundamental optimizations in large projects, where it isn't feasible to load all game textures into VRAM at the same time. For a small 2D indie game like AV-Racer or Astrobion, though, where VRAM usage never exceeds 1GB, we don't need to worry about the dynamic texture streaming larger games require. That said, I still occasionally needed to unload textures, like when the player switches from one map to the next, so I had to implement destroying textures loaded into VRAM. Loading all 24 maps into memory at once would have wasted too much VRAM.

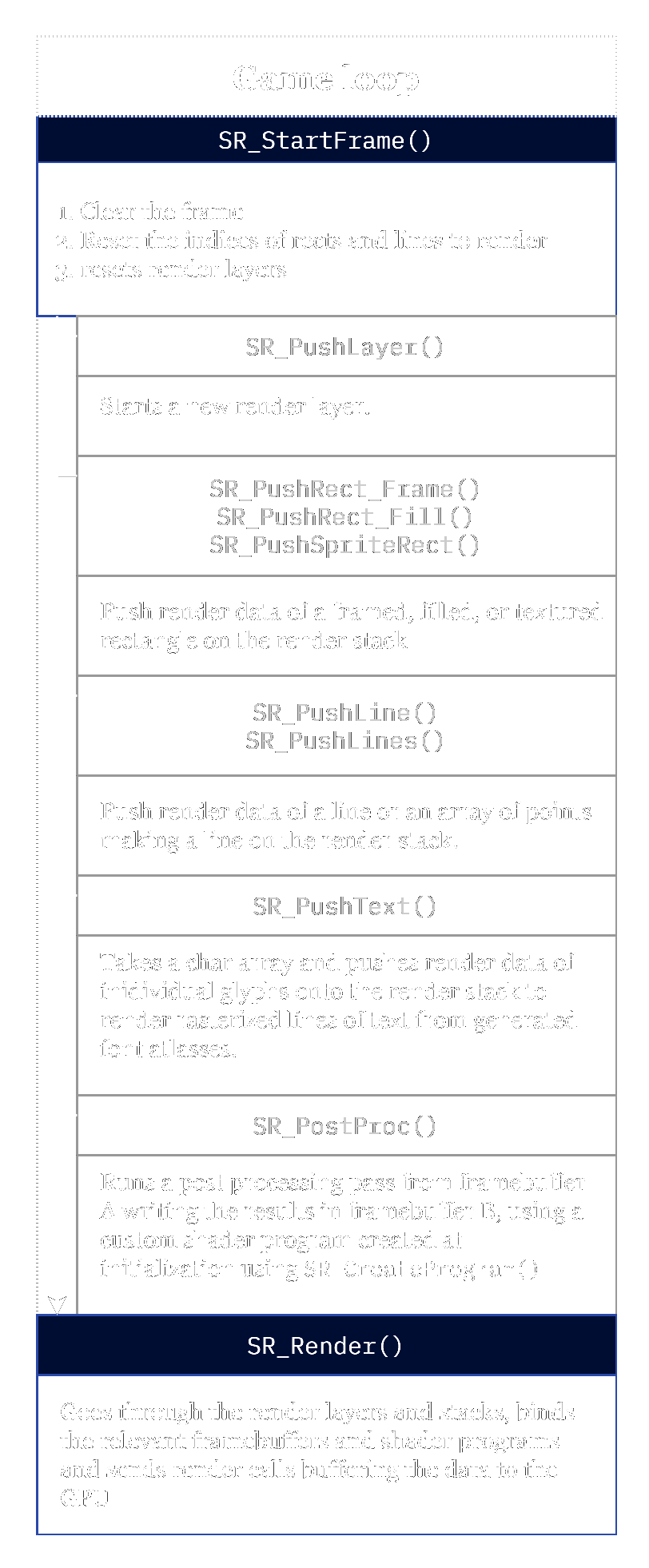

The game loop

Within the game loop, the library fills lists of objects pushed for rendering every frame and resets the counters at the start of the next frame. Here we can "push" render commands from anywhere in the code onto a list of commands that get executed at the end of the frame, where all the magic happens. Since this is a list, and the Z order of rendering is taken care of by the library (which may not have been the best decision), the call order of these render commands matters. The library treats the screen like a canvas: the first thing you tell it to draw is drawn first, and the next thing gets drawn on top of it. I carried this approach over from SDL's renderer, though letting users choose the order themselves by setting Z directly would give them more freedom, a change I plan to add to the library in the future.
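The library's exact scheme for deriving Z from call order isn't spelled out here. One possible scheme, shown as a hypothetical sketch, assumes depth testing with OpenGL's defaults (`GL_LESS`, depth cleared to 1.0), where a smaller NDC z is closer to the viewer: each push gets a slightly smaller z than the one before it, so later calls land on top.

```cpp
#include <cassert>

// Hypothetical: maps the push index (0 = first call this frame) to an NDC z
// strictly inside (-1, 1). Later pushes get smaller z, i.e. closer to the
// viewer under the default GL_LESS depth test.
float ZForPush(int pushIndex, int maxPushes) {
    assert(pushIndex >= 0 && pushIndex < maxPushes);
    float step = 2.0f / static_cast<float>(maxPushes + 1);
    return 1.0f - step * static_cast<float>(pushIndex + 1);
}
```

Depth-based ordering is also where the alpha blending bugs mentioned in the render layers section can come from: if rects and lines are batched separately, a line pushed before a translucent rect may be drawn after it and then fail the depth test behind the rect's transparent pixels. Splitting such content into separate render layers avoids that. A very large `maxPushes` also shrinks the spacing between z values toward the precision limit of the depth buffer.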

There are two key functions that must wrap all render commands: SR_StartFrame(), called at the start of the frame, and SR_Render(), called at the end. The former resets the render command counters and the latter executes the commands. Remember that SimplyRend doesn't use dynamic arrays: the user defines a static maximum number of primitives per frame at initialization, and only the counts determine the de facto sizes of these arrays.

Here are the usual calls to render common types of 2D shapes: rectangles, quads, lines, etc. Rectangles are among the most common in 2D games; the push call takes a struct with x, y, width and height, converts it to 6 points forming two triangles, and then increments the counter for that type's render data list. This counter tallies how many elements to render this frame and gets reset at the start of the next frame.
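A minimal sketch of that conversion, assuming `SR_Rect` stores the top-left corner plus width and height in pixels, and reusing the vertex layout from the data structures section (redefined so the block compiles on its own). `RectToTriangles` is a hypothetical name, and the choice of diagonal is arbitrary.

```cpp
#include <cassert>

struct v2 { float x, y; };
struct SR_Color { float r, g, b, a; };
struct SR_Vertex { v2 P; v2 UV; SR_Color C; float Z; };
struct glrect { SR_Vertex V[6]; };
struct SR_Rect { float x, y, w, h; }; // assumption: top-left corner + size, in pixels

// Expands one rectangle into two triangles sharing the TL-BR diagonal:
// (TL, TR, BR) and (TL, BR, BL). UVs span the full [0, 1] range; a textured
// push would use the sprite's sub-rectangle in the atlas instead.
glrect RectToTriangles(SR_Rect r, SR_Color c, float z) {
    assert(r.w >= 0 && r.h >= 0);
    const v2 tl{r.x, r.y}, tr{r.x + r.w, r.y};
    const v2 br{r.x + r.w, r.y + r.h}, bl{r.x, r.y + r.h};
    const v2 uvTL{0, 0}, uvTR{1, 0}, uvBR{1, 1}, uvBL{0, 1};
    glrect out;
    out.V[0] = {tl, uvTL, c, z};
    out.V[1] = {tr, uvTR, c, z};
    out.V[2] = {br, uvBR, c, z};
    out.V[3] = {tl, uvTL, c, z};
    out.V[4] = {br, uvBR, c, z};
    out.V[5] = {bl, uvBL, c, z};
    return out;
}
```

In the list-based design, pushing a rect is then just writing this result into `R[Count]` and incrementing `Count`.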

Another abstracted process is the text rendering function SR_PushText(), which pushes single lines of text for rendering by pushing a group of rectangles onto the render list. The function could be improved to support text wrapping, which would allow paragraph rendering. Astrobion supports that, but I didn't need it yet for AV-Racer.
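For a sense of what "a group of rectangles" means for one line of text, here is a hypothetical layout sketch. `Glyph` mirrors the main fields of stb_truetype's `stbtt_packedchar` (atlas box, offsets, advance); in practice `stbtt_GetPackedQuad()` computes these quads for you and also accounts for oversampling, which this sketch ignores.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical glyph record with the same main fields as stbtt_packedchar.
struct Glyph {
    unsigned short x0, y0, x1, y1; // glyph box in the atlas, in texels
    float xoff, yoff, xadvance;    // offset from pen, pen advance
};

struct Quad { float x0, y0, x1, y1; float u0, v0, u1, v1; };

// Lays out one line of text: one quad per glyph, pen moving along the
// baseline. `first` is the first codepoint of the packed range.
std::vector<Quad> LayoutLine(const std::string &text, const Glyph *glyphs,
                             int first, int count, float atlasW, float atlasH,
                             float penX, float baselineY) {
    assert(atlasW > 0 && atlasH > 0);
    std::vector<Quad> quads;
    for (unsigned char ch : text) {
        int i = static_cast<int>(ch) - first;
        if (i < 0 || i >= count) continue; // not in the packed range: skip
        const Glyph &g = glyphs[i];
        Quad q;
        q.x0 = penX + g.xoff;
        q.y0 = baselineY + g.yoff;
        q.x1 = q.x0 + static_cast<float>(g.x1 - g.x0);
        q.y1 = q.y0 + static_cast<float>(g.y1 - g.y0);
        q.u0 = g.x0 / atlasW;  q.v0 = g.y0 / atlasH;
        q.u1 = g.x1 / atlasW;  q.v1 = g.y1 / atlasH;
        quads.push_back(q);
        penX += g.xadvance;
    }
    return quads;
}
```

Each returned quad would be pushed as one textured rect, so a whole line of text adds no extra draw calls.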

One nice feature to have is post-processing. With SR_PostProc(), you can use the custom shader programs created at initialization to execute post-processing passes: you just specify the shader program and the source and target buffers. This is made possible by using SR_SetTarget() to alternate between different framebuffers.

AV-Racer uses SR_SetTarget(), for example, when rendering tire skid marks on the track. There, I set the render target to the track texture and render the skid lines on top of it without clearing, which accumulates the color in a cheap and nice-looking way.
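Chaining several post-processing passes usually means alternating between two offscreen targets, because sampling a texture while rendering into it creates a feedback loop with undefined results in OpenGL. The sketch below only plans the sequence of (origin, target) pairs; `PlanPostProcess` and the target names are hypothetical, and each entry would correspond to one SR_PostProc() call, whose exact signature isn't shown here.

```cpp
#include <cassert>
#include <vector>

enum TargetId { SCENE_A, SCENE_B, SCREEN };

struct Pass { TargetId Origin, Target; };

// Ping-pong plan: the scene is assumed to be rendered into SCENE_A; each pass
// reads the previous result and writes to the other offscreen target, and
// the last pass writes to the screen.
std::vector<Pass> PlanPostProcess(int passCount) {
    assert(passCount > 0);
    std::vector<Pass> plan;
    TargetId src = SCENE_A;
    for (int i = 0; i < passCount; ++i) {
        bool last = (i == passCount - 1);
        TargetId dst = last ? SCREEN : (src == SCENE_A ? SCENE_B : SCENE_A);
        plan.push_back({src, dst});
        src = dst;
    }
    return plan;
}
```

Two offscreen targets are enough for any number of passes, since each pass only needs the previous pass's output.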

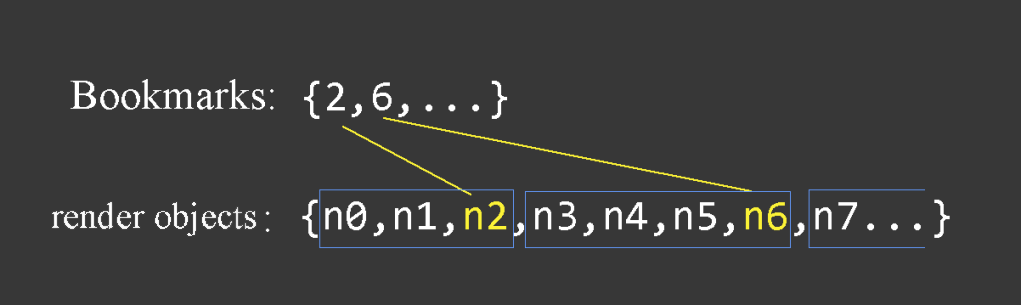

Bookmark arrays

When pushing render layers, we tell the library to send the last batch of primitive data to the GPU separately. However, all the data of each type is stored in a single array, i.e. all rectangles are in one array and all lines are in another. So instead of spawning a new array for each layer and dealing with dynamic array sizes, we stick to one array per type and use a bookmark system. Each render data array has a second, bookmark array that stores the index at which each render layer's data ends. So in the following example, rects_bookmark[0] == 2, which means that the data of render layer 1 ends at element n2.

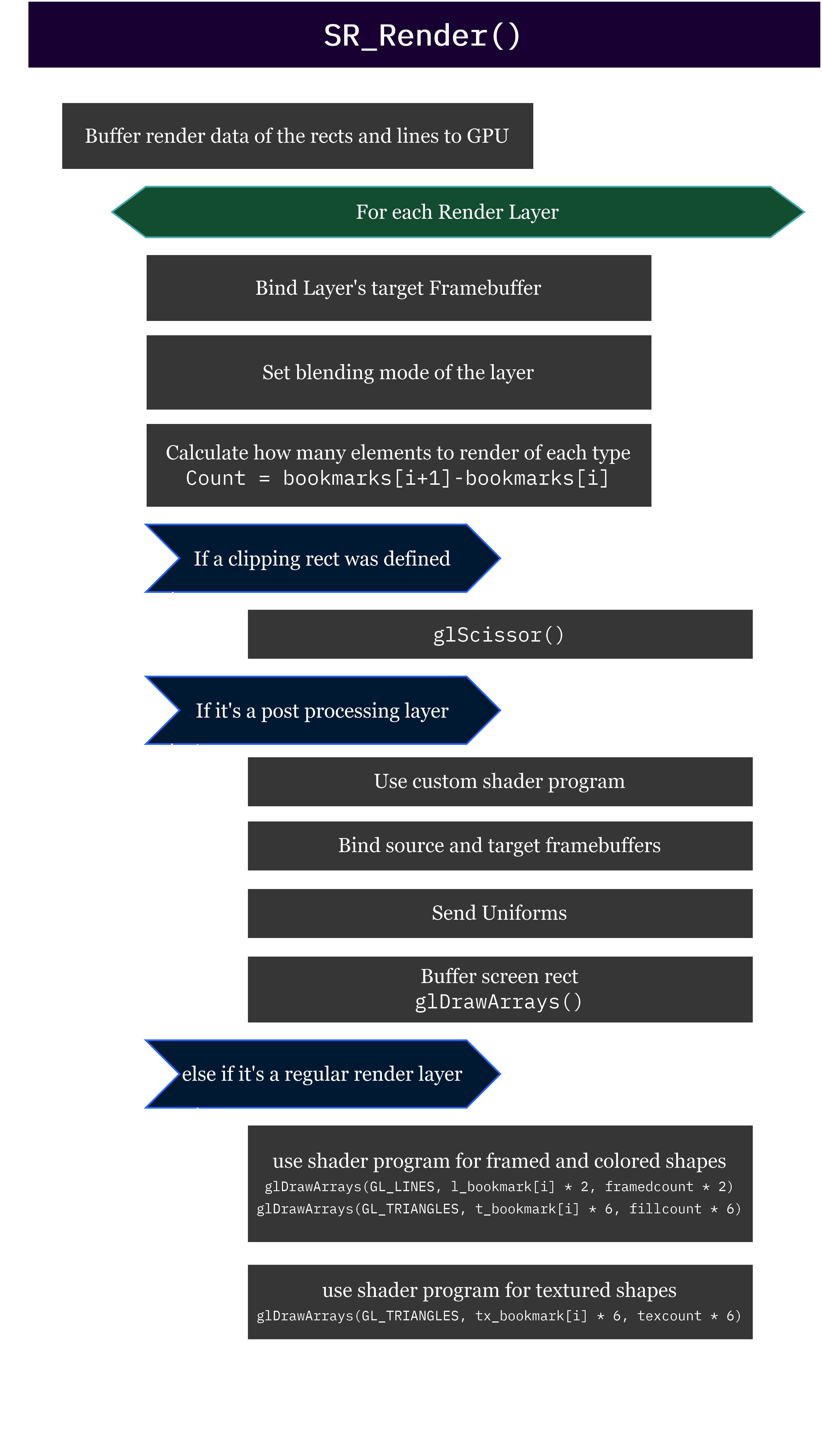
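Here is a minimal sketch of the bookmark idea for one primitive type. It assumes each bookmark holds the element count at the moment a layer is closed, i.e. an exclusive end index, so layer i owns elements [bookmark[i-1], bookmark[i]); `Bookmarks`, `CloseLayer` and `LayerRange` are hypothetical names.

```cpp
#include <cassert>

constexpr int kMaxLayers = 64; // assumption: fixed maximum number of layers

struct Bookmarks {
    int End[kMaxLayers]; // element count at the moment each layer was closed
    int LayerCount = 0;
};

// Called when a layer is pushed: remember where the current layer's data ends.
void CloseLayer(Bookmarks *b, int currentCount) {
    assert(b->LayerCount < kMaxLayers);
    b->End[b->LayerCount++] = currentCount;
}

struct Range { int First, Count; };

// Layer i owns elements [End[i-1], End[i]) of the shared array; layer 0 starts at 0.
Range LayerRange(const Bookmarks &b, int layer) {
    assert(layer >= 0 && layer < b.LayerCount);
    int first = (layer == 0) ? 0 : b.End[layer - 1];
    return Range{first, b.End[layer] - first};
}
```

A post-processing layer simply repeats the previous bookmark, which gives it an empty range, so no special storage is needed for layers without primitives.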

At the end of the game loop, we call SR_Render(). This is where we go through all the commands we have accumulated and send them to the GPU. Let's go through it:

First, we buffer all the render data of each list, from element 0 up to the count accumulated by the end of the frame. Then we loop over all the render layers pushed during this frame. Since every layer has a target framebuffer, we bind it, then set the layer's blending mode and calculate how many primitives this particular layer has using the bookmarks. Some counts can be zero here; a layer can also have no primitives at all, as is the case with post-processing calls, which are handled internally as render layers.

If a layer has a clipping rect, it is applied next. Then the algorithm branches into either a regular render layer or a post-processing call. For post-processing calls, we use the custom shaders and attached uniforms specified by the user at initialization: the function dereferences the pointers assigned to the uniforms and sends the data to the GPU. Then the frame rectangle gets buffered and a post-processing pass is applied with a draw call.

If the layer is a regular render layer with primitives to draw, we use the two default shaders. We then use the bookmarks in the draw calls to specify which range of the render data array to draw in each call. This is done for colored triangles and textured triangles, which draw the rectangles, and for lines.
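Putting the bookmarks and the branching together, a GL-free sketch of the per-layer dispatch might look like the following. It records the calls it would make instead of issuing them, collapses the colored and textured rect batches into one for brevity, and uses hypothetical names throughout. The one OpenGL fact it relies on is that `glDrawArrays(mode, first, count)` takes vertex indices, so primitive ranges are multiplied by 6 for rects and by 2 for lines.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Each layer stores where its rect and line data ends (its bookmarks).
struct Layer { bool PostProcess; int RectEnd; int LineEnd; };

std::vector<std::string> RenderLayers(const std::vector<Layer> &layers) {
    std::vector<std::string> calls;
    int rectStart = 0, lineStart = 0;
    for (const Layer &L : layers) {
        int rects = L.RectEnd - rectStart; // primitives owned by this layer
        int lines = L.LineEnd - lineStart;
        assert(rects >= 0 && lines >= 0);
        if (L.PostProcess) {
            calls.push_back("postprocess"); // full-frame quad with the custom program
        } else {
            // Would be glDrawArrays(GL_TRIANGLES, first, count): 6 vertices per rect.
            if (rects > 0)
                calls.push_back("triangles first=" + std::to_string(rectStart * 6) +
                                " count=" + std::to_string(rects * 6));
            // Would be glDrawArrays(GL_LINES, first, count): 2 vertices per line.
            if (lines > 0)
                calls.push_back("lines first=" + std::to_string(lineStart * 2) +
                                " count=" + std::to_string(lines * 2));
        }
        rectStart = L.RectEnd;
        lineStart = L.LineEnd;
    }
    return calls;
}
```

Since all vertex data was uploaded once at the start of SR_Render(), each layer costs only its draw calls, not another buffer upload.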

After going through the layers, it is left to the user's window management to swap the buffers (with SDL, SDL_GL_SwapWindow()), which ends the frame.

Final thoughts

SimplyRend is small and limited in scope, but it helps get a 2D project up and running on a robust groundwork that allows expansion. Also, having all the render algorithms packed into one function, SR_Render(), makes changes and updates to the API easier to approach.

There were a lot of things I learned in the process of writing this. Many of the functions could be better abstracted and implemented to give the user more control; I mentioned a few of these points above, such as giving the user control over Z ordering, allowing raw primitives like simple quads and triangles, abstracting render layers into general render commands with state structs passed in, generalizing algorithms with fewer hard-coded special cases, and supporting more dynamic texture loading. These improvements are likely to be added as I further develop Astrobion, but at the time of writing, this is how SimplyRend was implemented and shipped with AV-Racer.

Thanks for reading!

-Wassim